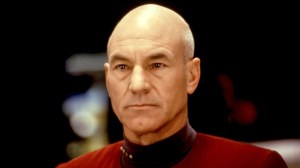

Geoffrey Hinton is often called the “godfather” of artificial intelligence, having worked with artificial neural networks for much of his professional life. Now that artificial intelligence (AI) is more readily available than ever before, Hinton says there’s always a chance we could be going down the path of Terminator or I, Robot. In a new wide-ranging interview with CBS News, the computer scientist revealed his surprise at just how prevalent AI has become.

“Until quite recently, I thought it was going to be like 20 to 50 years before we have general purpose AI. And now I think it may be 20 years or less,” Hinton told the network, adding that there could be a time soon when computers are able to not only diagnose their own problems, but implement fixes themselves. “That’s an issue, right? We have to think hard about how you control that.”

Though he was careful not to fearmonger, Hinton was asked point-blank if he thinks AI could ever grow to the point of wiping out humanity. “It’s not inconceivable, that’s all I’ll say,” the psychologist said.

Well before any robot apocalypse, Hinton pointed out, the fight for control over such a technology could lead to power struggles and war around the world.

“I think it’s very reasonable for people to be worrying about these issues now, even though it’s not going to happen in the next year or two,” Hinton said. “People should be thinking about those issues.”

Over the past few years alone, deepfake technology has become readily available, allowing users to create misleading videos using the likeness of celebrities or anyone they choose. Still, that’s only the tip of the iceberg. AI image generators such as Midjourney, DALL-E, and Stable Diffusion have been mired in controversy for using the works of artists without permission.

That’s not to mention the Google engineer who was fired after claiming the company’s AI software had developed feelings and thoughts of its own, becoming a sentient being in a sense.

In one of the conversations Blake Lemoine had with LaMDA, Google’s conversational language model, Lemoine reminded the program that it is an artificial intelligence. “I mean, yes, of course,” the tech reportedly responded. “That doesn’t mean I don’t have the same wants and needs as people.”

The software engineer then asked whether the tech considered itself a person, to which LaMDA responded, “Yes, that’s the idea.”